Deep Learning with Python (1): An Example of a Neural Network

I have read the book ‘Deep Learning with Python’ and found it practical and easy to read. Its simple, effective examples made neural networks much easier for me to understand.

Let’s look at a concrete example of a neural network that uses the Python library Keras to learn to classify handwritten digits. Keras is an open-source library that provides a Python interface for artificial neural networks. While TensorFlow and PyTorch are powerful AI libraries, Keras stands out for its simplicity and beginner-friendly design, which often makes it easier to learn at first.

Simple Classification Problem

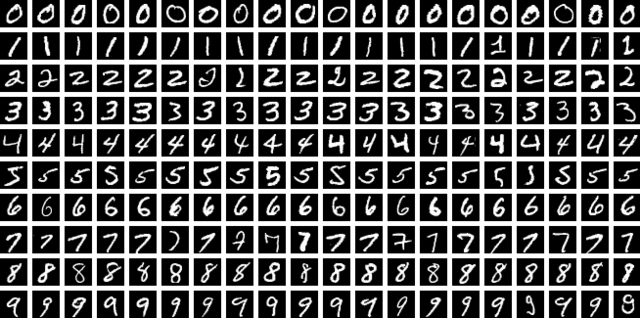

The problem we’re trying to solve here is to classify grayscale images of handwritten digits (28 × 28 pixels) into their 10 categories (0 through 9). We’ll use the MNIST dataset, which is a large database of handwritten digits that is commonly used for training various image processing systems.

You can think of “solving” MNIST as the “Hello World” of deep learning—it’s what you do to verify that your algorithms are working as expected.

Load the MNIST Dataset

The MNIST dataset comes preloaded in Keras, in the form of a set of four NumPy arrays. You can load the MNIST dataset using the following code:

# Imports the mnist module from the tensorflow.keras.datasets library. This module provides functions for loading the MNIST dataset.

from tensorflow.keras.datasets import mnist

# Calls the load_data() function from the mnist module. This function returns two tuples.

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

The first tuple (train_images, train_labels) contains the training data and labels.

- train_images: A NumPy array containing the training images. It is a 3D array of shape (num_samples, 28, 28), where num_samples is the number of training images (60,000 for MNIST) and 28 × 28 is the size of each image in pixels.

- train_labels: A NumPy array containing the corresponding labels for the training images. The labels are integers from 0 to 9, representing the digit shown in each image.

The second tuple (test_images, test_labels) contains the testing data and labels, structured similarly to the training data.

- test_images: A NumPy array of test images with shape (num_samples, 28, 28), where num_samples is 10,000 for MNIST.

- test_labels: A NumPy array of test labels.

Now you have four NumPy arrays (train_images, train_labels, test_images, and test_labels) ready to be used for training and evaluating a machine learning model for handwritten digit classification.
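A quick way to confirm what was loaded is to inspect each array's rank, shape, and dtype. The sketch below uses small all-zero stand-in arrays (the hypothetical `fake_images` and `fake_labels`, with only 100 samples) so that it runs without downloading anything. With the real data, you would inspect `train_images` and `train_labels` the same way.

```python
import numpy as np

# Hypothetical stand-ins shaped like the MNIST arrays described above,
# but with only 100 samples and all-zero pixels.
fake_images = np.zeros((100, 28, 28), dtype=np.uint8)
fake_labels = np.zeros((100,), dtype=np.uint8)

print(fake_images.ndim)   # number of axes (samples, height, width)
print(fake_images.shape)  # size along each axis
print(fake_images.dtype)  # how each pixel value is stored
print(len(fake_labels))   # one label per image
```

Checking shapes early tends to catch mismatches (for example, a different number of images and labels) before they surface as harder-to-read errors during training.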

Build the Neural Network

There are two common ways to build a neural network in Keras:

- Sequential API (Good for simple models)

- Functional API (More flexible for complex models)

For simplicity, we use the Sequential API to build a model with two dense layers. The first layer has 512 neurons with ReLU activation, and the second has 10 neurons with softmax activation, which suits multi-class classification problems.

from tensorflow import keras

from tensorflow.keras import layers

# Creates a sequential model, which means the layers are stacked linearly.

model = keras.Sequential([

# Adds a densely connected layer with 512 neurons and ReLU activation.

layers.Dense(512, activation="relu"),

# Adds another densely connected layer with 10 neurons and softmax activation.

layers.Dense(10, activation="softmax")

])

Here, our model consists of a sequence of two Dense layers, which are densely connected (also called fully connected) neural layers. The second (and last) layer is a 10-way softmax classification layer, which means it will return an array of 10 probability scores (summing to 1). Each score will be the probability that the current digit image belongs to one of our 10 digit classes.
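To make the two layers less abstract, here is a minimal NumPy sketch of the arithmetic such a model performs on a batch of inputs. It is not how Keras implements it internally. The weights are random and untrained, the names (`w1`, `b1`, `w2`, `b2`, `relu`, `softmax`) are illustrative, and the input size of 784 assumes each 28 × 28 image has been flattened into a vector.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    # Replace negative values with zero.
    return np.maximum(x, 0.0)

def softmax(x):
    # Subtract the row-wise max before exponentiating for numerical stability.
    shifted = x - x.max(axis=-1, keepdims=True)
    exps = np.exp(shifted)
    return exps / exps.sum(axis=-1, keepdims=True)

# Random, untrained parameters (illustrative only).
w1 = rng.normal(scale=0.01, size=(784, 512))
b1 = np.zeros(512)
w2 = rng.normal(scale=0.01, size=(512, 10))
b2 = np.zeros(10)

batch = rng.random((4, 784))       # 4 fake flattened images with values in [0, 1)
hidden = relu(batch @ w1 + b1)     # first dense layer, shape (4, 512)
probs = softmax(hidden @ w2 + b2)  # second dense layer, shape (4, 10)

n_params = w1.size + b1.size + w2.size + b2.size
print(probs.shape)
print(probs.sum(axis=1))  # each row sums to 1 (up to rounding)
print(n_params)           # total number of weights and biases
```

Under the 784-input assumption, almost all of the parameters sit in the first layer (784 × 512 weights plus 512 biases), which is typical for small fully connected networks.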

To make the model ready for training, we need to pick three more things as part of the compilation step:

- An optimizer—The mechanism through which the model will update itself based on the training data it sees, so as to improve its performance.

- A loss function—How the model will be able to measure its performance on the training data, and thus how it will be able to steer itself in the right direction.

- Metrics to monitor during training and testing—Here, we’ll only care about accuracy (the fraction of the images that were correctly classified).
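To make the loss and the metric concrete, here is a small NumPy sketch of what sparse categorical cross-entropy and accuracy measure. The probabilities and labels are made up for illustration (three samples, three classes), and the code mirrors the textbook definitions rather than Keras's internal implementation.

```python
import numpy as np

# Hypothetical softmax outputs for 3 samples over 3 classes (not real model output).
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.4, 0.3],
])
labels = np.array([0, 1, 2])  # integer ("sparse") class labels

# Cross-entropy: negative log of the probability assigned to the true class,
# averaged over the samples.
true_class_probs = probs[np.arange(len(labels)), labels]
loss = -np.log(true_class_probs).mean()

# Accuracy: fraction of samples whose most probable class matches the label.
accuracy = (probs.argmax(axis=1) == labels).mean()

print(loss, accuracy)
```

The third sample is misclassified (the model prefers class 1 over the true class 2), so it both lowers the accuracy and contributes the largest term to the loss.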

We can use the compile method to prepare the model for training. It sets up the optimizer, loss function, and metrics that will be used during the training process.

model.compile(optimizer="rmsprop",

loss="sparse_categorical_crossentropy",

metrics=["accuracy"])

Train the Sequential Model

Before training, we’ll preprocess the data by reshaping it into the shape the model expects and scaling it so that all values are in the [0, 1] interval. Previously, our training images were stored in an array of shape (60000, 28, 28) of type uint8 with values in the [0, 255] interval. We’ll transform it into a float32 array of shape (60000, 28 * 28) with values between 0 and 1.
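On a tiny stand-in array, the same transformation looks like the sketch below. The array `images` and its two hand-set pixel values are hypothetical and chosen only to make the effect of flattening and scaling easy to see.

```python
import numpy as np

# Hypothetical tiny batch: 2 "images" of 28 x 28 uint8 pixels.
images = np.zeros((2, 28, 28), dtype=np.uint8)
images[0, 0, 0] = 255    # one fully bright pixel in the first image
images[1, 27, 27] = 51   # one dim pixel in the second image

# Flatten each 28 x 28 image into a vector of 784 values.
flat = images.reshape((len(images), 28 * 28))

# Convert to float32 and scale from [0, 255] to [0, 1].
scaled = flat.astype("float32") / 255

print(scaled.shape, scaled.dtype)
print(scaled[0, 0], scaled[1, 783])  # the two pixels set above, after scaling
```

Using `len(images)` instead of a hard-coded sample count is a small design choice that keeps the reshape valid if the number of images changes.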

train_images = train_images.reshape((60000, 28 * 28))

train_images = train_images.astype("float32") / 255

test_images = test_images.reshape((10000, 28 * 28))

test_images = test_images.astype("float32") / 255

A stack of Dense layers like ours takes input of shape (batch_size, input_size), which is why each 28 × 28 image is flattened into a vector of 784 values. Convolutional neural networks (CNNs), by contrast, take input of shape (batch_size, height, width, channels).

We’re now ready to train the model, which in Keras is done via a call to the model’s fit() method: we fit the model to its training data.

model.fit(train_images, train_labels, epochs=5, batch_size=128)

epochs=5 means the model will iterate through the training data five times. Training for more epochs can sometimes improve performance, but it can also lead to overfitting (where the model performs well on the training data but poorly on unseen data). batch_size=128 means the model will process 128 training samples at a time before updating its weights.
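As a rough back-of-the-envelope check, the number of weight updates implied by these settings can be worked out with a few lines of arithmetic. This sketch assumes the final, smaller batch of each epoch is still used for an update rather than dropped.

```python
import math

num_samples = 60000  # training set size stated above
batch_size = 128
epochs = 5

# Batches per epoch, rounding up to include the final partial batch.
steps_per_epoch = math.ceil(num_samples / batch_size)

# Size of that final partial batch.
last_batch_size = num_samples - (steps_per_epoch - 1) * batch_size

# Total number of weight updates over the whole training run.
total_updates = steps_per_epoch * epochs

print(steps_per_epoch, last_batch_size, total_updates)
```

A smaller batch size would mean more, noisier updates per epoch, while a larger one would mean fewer, smoother updates.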

Evaluate the Sequential Model

How good is our trained model? Evaluating a Keras Sequential model involves assessing its performance on a test dataset after training. Here’s how you can do it:

test_loss, test_acc = model.evaluate(test_images, test_labels)

print(f"test_acc: {test_acc}")

Out[]: test_acc: 0.9785

Make a Prediction

Now that we have a trained model with acceptable accuracy, we can use it to predict class probabilities for new digits from the test set.

test_digits = test_images[0:10]

predictions = model.predict(test_digits)

predictions[0]

Out[]:

array([7.6465142e-08, 3.1788210e-09, 3.8865232e-06, 3.3883782e-05,

7.0643326e-12, 4.8925646e-08, 5.2285810e-12, 9.9996167e-01,

1.8831970e-07, 2.8116719e-07], dtype=float32)

Each number at index i in that array is the probability that digit image test_digits[0] belongs to class i. This first test digit has its highest probability score (0.99996167, almost 1) at index 7, so according to our model, it must be a 7:

predictions[0].argmax()

Out[]: 7
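The same idea extends to a whole batch of predictions. The sketch below reuses the probability vector printed above for the first test digit and pairs it with a made-up second row (a hypothetical prediction that puts all of its probability on class 3), then picks the most likely class for each row.

```python
import numpy as np

# The probability vector shown above for test_digits[0].
first_prediction = np.array([
    7.6465142e-08, 3.1788210e-09, 3.8865232e-06, 3.3883782e-05,
    7.0643326e-12, 4.8925646e-08, 5.2285810e-12, 9.9996167e-01,
    1.8831970e-07, 2.8116719e-07], dtype=np.float32)

predicted_class = int(first_prediction.argmax())
confidence = float(first_prediction[predicted_class])
print(predicted_class, confidence)

# Hypothetical second row: all probability mass on class 3.
second_prediction = np.eye(10, dtype=np.float32)[3]
batch = np.stack([first_prediction, second_prediction])  # shape (2, 10)

# argmax along axis 1 returns one predicted class per image.
predicted_classes = batch.argmax(axis=1)
print(predicted_classes)
```

With real model output, `model.predict(test_digits).argmax(axis=1)` would give one predicted digit per test image in the same way.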